Netsettings have always been a complex and hotly debated topic in Counter-Strike, and there is still a lot of misunderstanding about the netcode in CS:GO. We will try to shed some light on the whole netsettings debate and explain which netsettings you should use for competitive play.

Recommended Netsettings

Straight to the point – these are our recommended netsettings for a typical high-speed internet connection (DSL 6000+). They are optimized for competitive play on 128 tick servers. On Valve’s official Matchmaking servers, which only run at 64 tick, your netsettings are automatically adapted to the lower tickrate.

rate "786432"

cl_cmdrate "128"

cl_updaterate "128"

cl_interp "0"

cl_interp_ratio "1"

Explanation of the config variables

- rate “786432” (def. “196608”): Max. bytes/sec the host can receive data

- cl_cmdrate “128” (def. “64”, min. 10.000000 max. 128.000000): Max. number of command packets sent to server per second

- cl_updaterate “128” (def. “64”): Number of packets per second of updates you are requesting from the server

- cl_interp “0” (def. “0.03125”, min. 0.000000 max. 0.500000): Sets the interpolation amount (bounded on low side by server interp ratio settings)

- cl_interp_ratio “1” (def. “2.0”): Sets the interpolation amount (final amount is cl_interp_ratio / cl_updaterate)
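To make the interpolation descriptions more concrete, here is a minimal Python sketch of how the final interpolation delay could be derived from these variables. The function name `effective_interp` is our own, and combining the two values with `max()` is an assumption based on the hint that cl_interp is "bounded on low side"; the game's actual calculation may apply further server-side limits.

```python
def effective_interp(cl_interp, cl_interp_ratio, cl_updaterate):
    """Return the assumed interpolation delay in seconds.

    Assumption: the ratio-based value (cl_interp_ratio / cl_updaterate)
    acts as a lower bound for cl_interp, so the larger of the two wins.
    """
    ratio_based = cl_interp_ratio / cl_updaterate
    return max(cl_interp, ratio_based)


# Recommended settings from this article (128 tick)
recommended = effective_interp(cl_interp=0.0, cl_interp_ratio=1, cl_updaterate=128)
# Default settings (64 tick)
default = effective_interp(cl_interp=0.03125, cl_interp_ratio=2, cl_updaterate=64)

print(f"recommended: {recommended * 1000:.4f} ms")  # 7.8125 ms
print(f"default:     {default * 1000:.4f} ms")      # 31.2500 ms
```

Under this assumption, setting cl_interp to 0 simply lets the ratio decide, which is why the recommended config ends up with one update interval of interpolation on a 128 tick server.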

In September 2016 Valve updated some of the networking aspects of CS:GO and increased the default rate from “80000” to “196608”, which will accommodate users with internet connections of 1.5 Mbps or better. They also increased the max rate setting up to “786432” for users with at least 6 Mbps. If you have 6 Mbps or more bandwidth, you should use the new max rate of “786432” to allow CS:GO to pass more traffic from the server to your system.
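As a quick sanity check of these numbers, the rate values can be converted from bytes per second into megabits per second. The sketch below assumes that rate is measured in bytes per second (as the variable description says); the helper name `rate_to_mbps` is hypothetical. With a binary megabit (1024 × 1024 bits), the new default and max values come out at exactly 1.5 and 6 Mbps.

```python
def rate_to_mbps(rate_bytes_per_sec, binary=False):
    """Convert a rate value (bytes/sec) to megabits per second.

    binary=False uses 1 Mbit = 1,000,000 bits,
    binary=True  uses 1 Mbit = 1024 * 1024 bits.
    """
    bits_per_sec = rate_bytes_per_sec * 8
    divisor = 1024 * 1024 if binary else 1_000_000
    return bits_per_sec / divisor


for label, rate in [("old default", 80000),
                    ("new default", 196608),
                    ("new max", 786432)]:
    print(f"{label:12s} rate {rate:6d} = "
          f"{rate_to_mbps(rate):.2f} Mbps (decimal), "
          f"{rate_to_mbps(rate, binary=True):.2f} Mbps (binary)")
```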

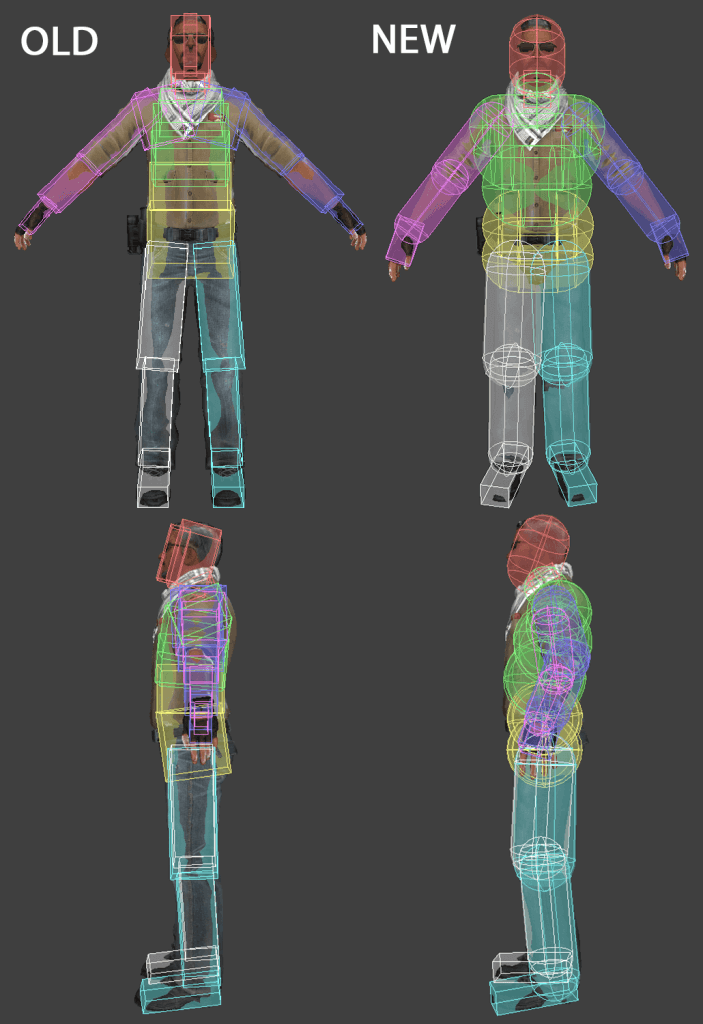

Old versus New Hitboxes

On 15 September 2015, Valve released an update that improved the old player skeleton and hitbox system in CS:GO. They also replaced all player animations to get rid of some really annoying bugs. The Reddit user whats0n took a look into the model files and published a very nice image comparison between the old and new hitbox system.

As you can see, the new hitboxes are capsule-based. The capsules fill out the player models slightly better, so it’s a little bit easier to hit the enemy. Together with the new player skeleton and the reworked animations, this update also fixed a bunch of annoying bugs, e.g. the hitbox bugs that occurred while a player was jumping, planting the bomb or moving on a ladder.

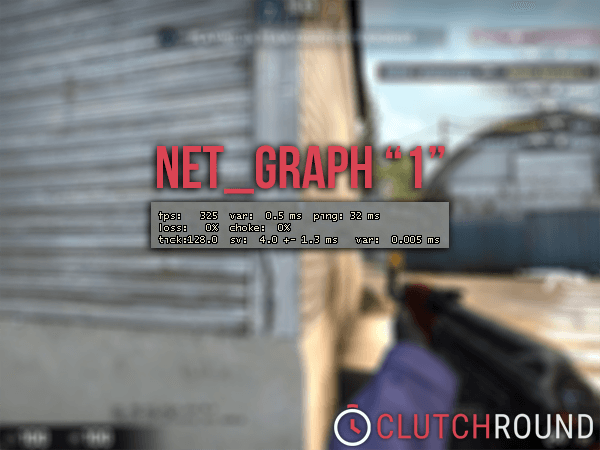

Official explanation of the new net_graph

The following questions were answered by Vitaliy Genkin (Valve employee) via csgomailinglist, after Valve decided to restrict all net_graph values above 1 in April 2014.

Value of “sv” shows how many milliseconds server simulation step took on the last networked frame.

What does the +- next to the sv represent?

Value following sv +- shows standard deviation of server simulation step duration measured in milliseconds over the history of last 50 server frames.

What does the current value for var represent?

Value for sv var when server performance is meeting tickrate requirements represents the standard deviation of accuracy of server OS nanosleep function measured in microseconds over the history of last 50 server frames. The latest update relies on it for efficiently sleeping and waking up to start next frame simulation. Should usually be fractions of milliseconds. Value for client var near fps net graph display is showing standard deviation of client framerate measured in milliseconds over the history of last 1000 client frames. By using fps_max to restrict client rendering to maintain a consistent fps client can have framerate variability at a very low value, but keep in mind that system processes and 3rd party software can influence framerate variability as well.

Originally, it was considered respectable to have a var of less than 1, reasonable to have it spike as high as 2, but pretty much horrible to have a variance remain above 2 for any length of time. What would be the equivalent values for the three new measurements (sv, +-, and var)?

For a 64-tick server as long as sv value stays mostly below 15.625 ms the server is meeting 64-tick rate requirements correctly. For a 128-tick server as long as sv value stays mostly below 7.8 ms the server is meeting 128-tick rate requirements correctly. If standard deviation of frame start accuracy exceeds fractions of millisecond then the server OS has lower sleep accuracy and you might want to keep sv simulation duration within the max duration minus OS sleep precision (e.g. for a 64-tick Windows server with sleep accuracy variation of 1.5 ms you might want to make sure that server simulation doesn’t take longer than 15.625 minus 1.5 ~= 14 ms to ensure best experience).

Simplified & Summarized

Client-side:

fps var: low value = good

fps var: high value = bad

Server-side:

64 tickrate: sv < 15.625ms = good

64 tickrate: sv > 15.625ms = bad

128 tickrate: sv < 7.8ms = good

128 tickrate: sv > 7.8ms = bad
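The thresholds above are simply the time available per tick (1000 ms divided by the tickrate). The following Python sketch reproduces them and also applies the sleep-precision margin from the quoted answer; the function names and the sample sv value of 14.5 ms are our own illustrative assumptions, not part of net_graph or the game.

```python
def tick_budget_ms(tickrate):
    """Time available for one server simulation step, in milliseconds."""
    return 1000.0 / tickrate


def meets_tickrate(sv_ms, tickrate, sleep_precision_ms=0.0):
    """Check whether a measured sv value fits into the tick budget.

    sleep_precision_ms is the OS sleep accuracy variation that is
    subtracted from the budget as a safety margin.
    """
    return sv_ms < tick_budget_ms(tickrate) - sleep_precision_ms


print(tick_budget_ms(64))    # 15.625
print(tick_budget_ms(128))   # 7.8125

# Hypothetical sv reading of 14.5 ms on a 64 tick server
print(meets_tickrate(14.5, 64))                          # True
print(meets_tickrate(14.5, 64, sleep_precision_ms=1.5))  # False (budget is 14.125 ms)
```

Note that the 7.8 ms figure used in the summary is the rounded value of 7.8125 ms.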

What is the difference between 64 and 128 tick servers?

As a rule of thumb, the higher the tickrate, the more precise the simulation, because the server processes the game state more often per second. This results in a better gameplay experience (more precise movement and hit detection), because the server and the client update each other at a higher frequency.

Of course this is very simplified, but to understand the advantage of a higher tickrate, you first need to understand the basics of multiplayer networking within the Source Engine. Valve runs an official wiki with some good explanations of how the netcode works in CS:GO. We could summarize this information in our own words, but we feel you should read the official words from Valve:

The client and server communicate with each other by sending small data packets at a high frequency. A client receives the current world state from the server and generates video and audio output based on these updates. The client also samples data from input devices (keyboard, mouse, microphone, etc.) and sends these input samples back to the server for further processing. Clients only communicate with the game server and not between each other (like in a peer-to-peer application).

Network bandwidth is limited, so the server can’t send a new update packet to all clients for every single world change. Instead, the server takes snapshots of the current world state at a constant rate and broadcasts these snapshots to the clients. Network packets take a certain amount of time to travel between the client and the server (i.e. the ping time). This means that the client time is always a little bit behind the server time. Furthermore, client input packets are also delayed on their way back, so the server is processing temporally delayed user commands. In addition, each client has a different network delay which varies over time due to other background traffic and the client’s framerate. These time differences between server and client causes logical problems, becoming worse with increasing network latencies. In fast-paced action games, even a delay of a few milliseconds can cause a laggy gameplay feeling and make it hard to hit other players or interact with moving objects. Besides bandwidth limitations and network latencies, information can get lost due to network packet loss.

If you want to dig even deeper into the netcode of CS:GO, we highly recommend watching the “Netcode Analysis” from Battle(non)sense. He clearly visualizes the basics of netcode in online games, measures the delays in CS:GO and compares them to other games.